Prometheus monitoring error: context deadline exceeded

Tags: Troubleshooting, Monitoring, Prometheus

Symptoms

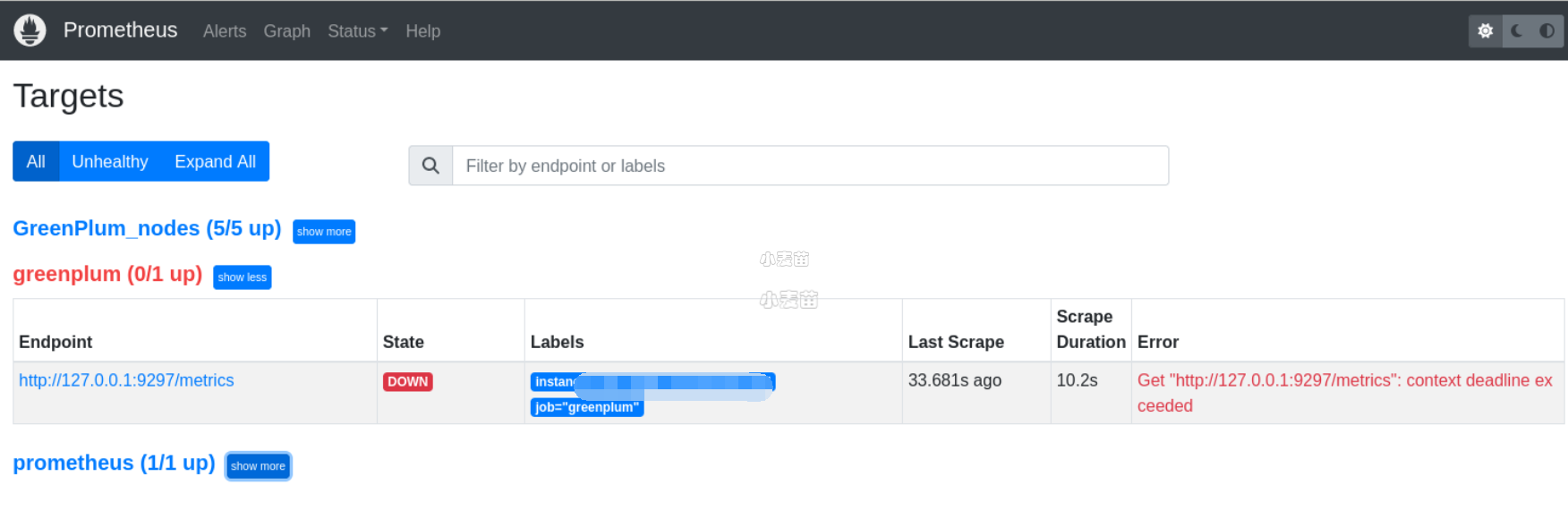

Prometheus reports the following error for a Greenplum scrape target:

    Get "http://127.0.0.1:9297/metrics": context deadline exceeded

At the same time, Grafana shows no monitoring data.

Checking the status of greenplum_exporter shows error output:

    [root@mdw1 ~]# systemctl status greenplum_exporter.service
    ● greenplum_exporter.service - greenplum exporter
       Loaded: loaded (/etc/systemd/system/greenplum_exporter.service; enabled; vendor preset: disabled)
       Active: active (running) since Mon 2023-01-30 14:05:27 CST; 1 day 6h ago
     Main PID: 97507 (greenplum_expor)
        Tasks: 38
       CGroup: /system.slice/greenplum_exporter.service
               └─97507 /usr/local/greenplum_exporter/bin/greenplum_exporter --log.level=error

    Jan 30 14:05:27 mdw1 systemd[1]: Started greenplum exporter.
    Jan 30 14:44:41 mdw1 greenplum_exporter[97507]: time="2023-01-30T14:44:41+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement ...ctor.go:100"
    Jan 30 22:46:20 mdw1 greenplum_exporter[97507]: time="2023-01-30T22:46:20+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement ...ctor.go:100"
    Jan 30 22:49:36 mdw1 greenplum_exporter[97507]: time="2023-01-30T22:49:36+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement ...ctor.go:100"
    Jan 31 15:33:41 mdw1 greenplum_exporter[97507]: time="2023-01-31T15:33:41+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement ...ctor.go:100"
    Jan 31 16:57:43 mdw1 greenplum_exporter[97507]: time="2023-01-31T16:57:43+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:context deadline e...ctor.go:100"
    Jan 31 19:58:06 mdw1 greenplum_exporter[97507]: time="2023-01-31T19:58:06+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:pq: canceling stat...ctor.go:100"
    Hint: Some lines were ellipsized, use -l to show in full.

The logs are as follows:

    [root@mdw1 greenplum_exporter]# journalctl -r | grep greenplum_exporter | head -n 10
    Jan 29 22:56:18 mdw1 greenplum_exporter[211676]: time="2023-01-29T22:56:18+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user request" source="collector.go:100"
    Jan 29 21:08:11 mdw1 greenplum_exporter[211676]: time="2023-01-29T21:08:11+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:context deadline exceeded" source="collector.go:100"
    Jan 29 17:58:00 mdw1 greenplum_exporter[211676]: time="2023-01-29T17:58:00+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:pq: interconnect error: connection closed prematurely; pq: failed to acquire resources on one or more segments" source="collector.go:100"
    Jan 28 23:15:55 mdw1 greenplum_exporter[211676]: time="2023-01-28T23:15:55+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:pq: canceling statement due to user request" source="collector.go:100"
    Jan 28 20:51:26 mdw1 greenplum_exporter[211676]: time="2023-01-28T20:51:26+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user request" source="collector.go:100"
    Jan 28 19:56:41 mdw1 greenplum_exporter[211676]: time="2023-01-28T19:56:41+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:context deadline exceeded" source="collector.go:100"
    Jan 28 19:02:54 mdw1 greenplum_exporter[211676]: time="2023-01-28T19:02:54+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user request" source="collector.go:100"
    Jan 28 18:05:13 mdw1 greenplum_exporter[211676]: time="2023-01-28T18:05:13+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user request" source="collector.go:100"
    Jan 28 18:04:24 mdw1 greenplum_exporter[211676]: time="2023-01-28T18:04:24+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user request" source="collector.go:100"
    Jan 28 15:46:01 mdw1 greenplum_exporter[211676]: time="2023-01-28T15:46:01+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user request" source="collector.go:100"
    [root@mdw1 greenplum_exporter]# journalctl -u greenplum_exporter --since "2023-01-28" -r
    -- Logs begin at Mon 2022-12-12 18:14:04 CST, end at Mon 2023-01-30 14:10:01 CST. --
    Jan 30 14:05:27 mdw1 systemd[1]: Started greenplum exporter.
    Jan 30 14:05:27 mdw1 systemd[1]: Stopped greenplum exporter.
    Jan 30 14:05:27 mdw1 systemd[1]: Stopping greenplum exporter...
    Jan 30 12:42:30 mdw1 systemd[1]: Started greenplum exporter.
    Jan 30 12:42:17 mdw1 systemd[1]: Stopped greenplum exporter.
    Jan 30 12:42:17 mdw1 systemd[1]: Stopping greenplum exporter...
    Jan 29 22:56:18 mdw1 greenplum_exporter[211676]: time="2023-01-29T22:56:18+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user requ
    Jan 29 21:08:11 mdw1 greenplum_exporter[211676]: time="2023-01-29T21:08:11+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:context deadline exceeded" source=
    Jan 29 17:58:00 mdw1 greenplum_exporter[211676]: time="2023-01-29T17:58:00+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:pq: interconnect error: connection
    Jan 28 23:15:55 mdw1 greenplum_exporter[211676]: time="2023-01-28T23:15:55+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:pq: canceling statement due to use
    Jan 28 20:51:26 mdw1 greenplum_exporter[211676]: time="2023-01-28T20:51:26+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user requ
    Jan 28 19:56:41 mdw1 greenplum_exporter[211676]: time="2023-01-28T19:56:41+08:00" level=error msg="get metrics for scraper:database_size_scraper failed, error:context deadline exceeded" source=
    Jan 28 19:02:54 mdw1 greenplum_exporter[211676]: time="2023-01-28T19:02:54+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user requ
    Jan 28 18:05:13 mdw1 greenplum_exporter[211676]: time="2023-01-28T18:05:13+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user requ
    Jan 28 18:04:24 mdw1 greenplum_exporter[211676]: time="2023-01-28T18:04:24+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user requ
    Jan 28 15:46:01 mdw1 greenplum_exporter[211676]: time="2023-01-28T15:46:01+08:00" level=error msg="get metrics for scraper:segment_scraper failed, error:pq: canceling statement due to user requ
    Ja
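To see at a glance which scrapers fail most often, the log lines can be tallied per scraper. The following is a minimal sketch, not part of the original troubleshooting session: the function name `count_scraper_errors` is hypothetical, and it assumes the log lines contain the `scraper:<name> failed` pattern seen in the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count greenplum_exporter errors per scraper.
# Reads log lines on stdin and prints "<scraper_name> <count>",
# most frequent first. Assumes the "scraper:<name> failed" pattern
# from the exporter log messages.
count_scraper_errors() {
  grep -o 'scraper:[a-z_]* failed' \
    | sed -e 's/^scraper://' -e 's/ failed$//' \
    | sort | uniq -c | sort -rn \
    | awk '{print $2, $1}'
}

# Usage on the server (unit name taken from the systemctl output):
#   journalctl -u greenplum_exporter --since "2023-01-28" --no-pager | count_scraper_errors
```

In the excerpts above only two scrapers appear, `segment_scraper` and `database_size_scraper`, so a tally like this mainly helps once a longer time window is inspected.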

Problem analysis

First, run the following on the server:

    time curl http://127.0.0.1:9297/metrics

and check whether any data comes back:

    [root@mdw1 greenplum_exporter]# time curl http://127.0.0.1:9297/metrics
    # HELP greenplum_cluster_active_connections Active connections of GreenPlum cluster at scape time
    # TYPE greenplum_cluster_active_connections gauge
    greenplum_cluster_active_connections 273
    ...... (omitted)
    # HELP greenplum_up Whether greenPlum cluster is reachable
    # TYPE greenplum_up gauge
    greenplum_up 1

    real 0m59.924s
    user 0m0.004s
    sys 0m0.006s
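The request returns data, but only after about 60 seconds. Prometheus gives up on a scrape once its `scrape_timeout` elapses (10s by default; whether this deployment overrides it is not shown here), which would match the `context deadline exceeded` error. The sketch below repeats the measurement in a scriptable form; the helper names `scrape_seconds` and `exceeds_timeout` are hypothetical, and the 10-second threshold is an assumption based on the Prometheus default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how long one request to the exporter
# takes, in seconds. $1 is the metrics URL; $2 is an optional upper
# bound for curl itself (defaults to 120 seconds).
scrape_seconds() {
  curl -s -o /dev/null -w '%{time_total}' --max-time "${2:-120}" "$1"
}

# Hypothetical helper: succeed (exit 0) if elapsed seconds ($1)
# are greater than the timeout in seconds ($2). awk is used because
# the shell cannot compare fractional numbers natively.
exceeds_timeout() {
  awk -v e="$1" -v t="$2" 'BEGIN { exit !(e + 0 > t + 0) }'
}

# Usage on the server; 10 is the assumed scrape_timeout in seconds:
#   t=$(scrape_seconds http://127.0.0.1:9297/metrics)
#   if exceeds_timeout "$t" 10; then
#     echo "scrape took ${t}s, longer than the assumed timeout"
#   fi
```

With the timing measured above (59.924 seconds), `exceeds_timeout 59.924 10` succeeds, meaning a scrape with the default timeout would be cut off long before the exporter responds.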