合 Greenplum 7 新特性整理

- 新特性总结

- 快速拥有GPDB 7和gpcc 7环境

- 重要的新特性

- 内核

- 自动启用vacuuum

- 支持仅索引扫描和覆盖索引

- 引入监控视图可以监控长运行的操作

- 新增pg_backend_memory_contexts的系统视图

- 增加列不再需要重写表

- 分区表

- 其它

- Example 1: Creating a Range Partitioned Table

- Example 2: Classic Range Partitioned Table

- Example 3: Creating a List Partitioned Table

- Example 4: Classic List Partitioned Table

- Example 5: Creating a Hash Partitioned Table

- Example 6: Adding Hash Partitions

- Example 7: Creating a Composite Partitioned Table

- Example 8: Adding Composite Partitions

- Example 9: Adding Default Partition

- Example 10: Splitting Default Partition

- Example 11: Detaching Partitions

- Example 12: Attaching Existing Table

- Example 13: Checking Partition Information

- Example 14: Getting Partition Statistics

- Example 15: Truncate a Partition

- Example 16: Exchange Partition Data

- Example 17: Applying Constraints on Partitions

- Example 18: Creating an indexed Partitioned Tables

- Example 19: Rename Partitioned Tables

- Example 20: Dropping Partitions

- 支持hash索引

- 支持生成列

- AO表特性

- AO表分析可以更快

- AO表支持唯一索引、唯一约束和主键

- 引入gppkg v2

- 数据库维护

- 性能

- 优化器 Greenplum Query Optimizer (GPORCA)

- AO表 Append-Optimized Tables

- GP7移除的功能

- GP7系统视图

- GP7调优参数

- GP7动态分区裁剪

- 用户或角色不允许以“pg_”开头

- 参考

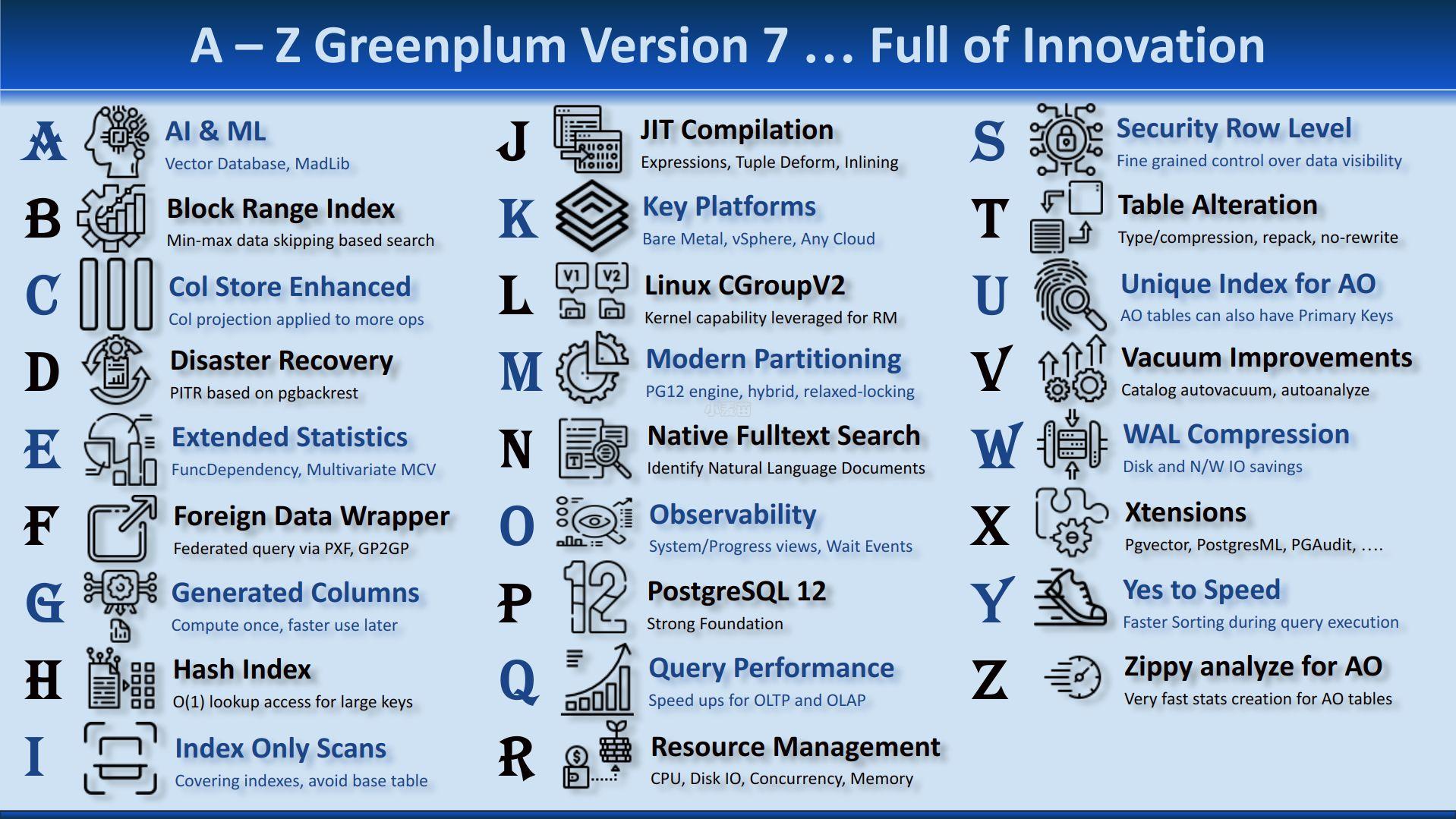

新特性总结

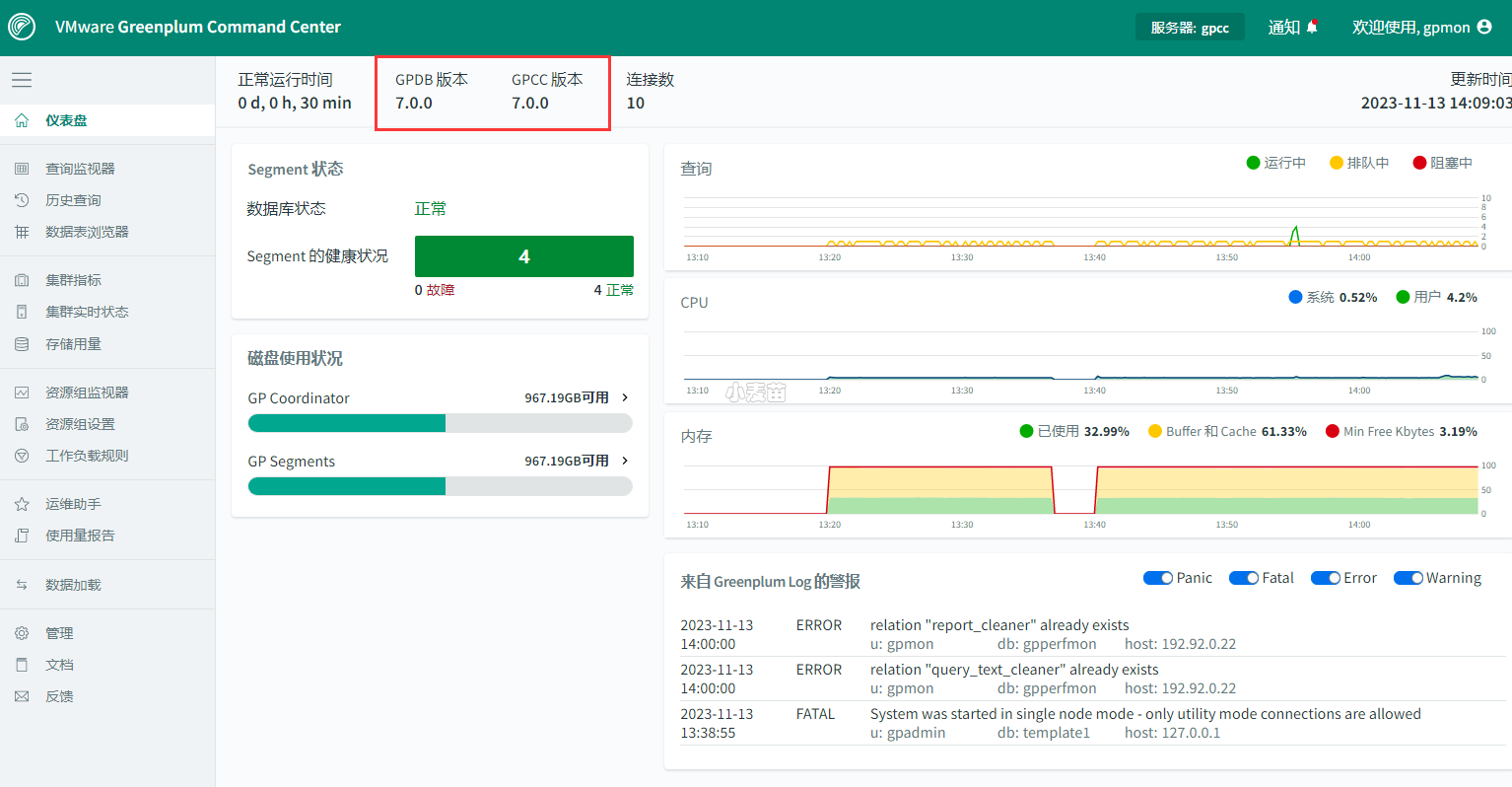

快速拥有GPDB 7和gpcc 7环境

参考:https://www.dbaup.com/zaidockerzhongkuaisutiyangreenplum-7-0-0.html

此docker包括1个master,1个standby master,2个segment,2个mirror实例;还包括gpcc 7.0.0

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 | docker rm -f gpdb7 docker run -itd --name gpdb7 -h gpdb7 \ -p 5437:5432 -p 28087:28080 \ -v /sys/fs/cgroup:/sys/fs/cgroup \ --privileged=true lhrbest/greenplum:7.0.0_v2 \ /usr/sbin/init docker exec -it gpdb7 bash su - gpadmin gpstart -a gpcc start gpcc status gpstate [gpadmin@gpdb7 ~]$ psql psql (12.12) Type "help" for help. postgres=# select version(); version ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- PostgreSQL 12.12 (Greenplum Database 7.0.0 build commit:0a7a3566873325aca1789ae6f818c80f17a9402d Open Source) on x86_64-pc-linux-gnu, compiled by gcc (GCC) 8.5.0 20210514 (Red Hat 8.5.0-18), 64-bit compiled on Sep 20 2023 23:29:19 Bhuvnesh C. (1 row) postgres=# select * from gp_segment_configuration ; dbid | content | role | preferred_role | mode | status | port | hostname | address | datadir ------+---------+------+----------------+------+--------+------+----------+---------+-------------------------------------------- 1 | -1 | p | p | n | u | 5432 | gpdb7 | gpdb7 | /opt/greenplum/data/master/gpseg-1 6 | -1 | m | m | s | u | 5433 | gpdb7 | gpdb7 | /opt/greenplum/data/master_standby/gpseg-1 2 | 0 | p | p | n | u | 6000 | gpdb7 | gpdb7 | /opt/greenplum/data/primary/gpseg0 4 | 0 | m | m | n | d | 7000 | gpdb7 | gpdb7 | /opt/greenplum/data/mirror/gpseg0 3 | 1 | p | p | n | u | 6001 | gpdb7 | gpdb7 | /opt/greenplum/data/primary/gpseg1 5 | 1 | m | m | n | d | 7001 | gpdb7 | gpdb7 | /opt/greenplum/data/mirror/gpseg1 (6 rows) |

gpmon/lhr

重要的新特性

内核

Greenplum 7的内核从9.4.26升级到了12.12,其性能、功能均领先于Greenplum 6

自动启用vacuuum

缺省打开了autovacuum,会自动对系统表进行VACUUM和ANALYZE操作,并对用户表进行ANALYZE操作。

INSERT,UPDATE和DELETE影响的数据量大于下面的计算结果时,会自动对操作的表进行ANALYZE操作:

1 2 3 4 | autovacuum_analyze_scale_factor × reltuples + autovacuum_analyze_threshold -- 默认 0.1 * 行数 + 50 |

Automatic Vacuum is now enabled by default for all databases, which automatically performs VACUUM and ANALYZE operations against all catalog tables, as well as runs ANALYZE for all users tables in those databases.

- autovacuum

- autovacuum_analyze_scale_factor

- autovacuum_analyze_threshold

- autovacuum_freeze_max_age

- autovacuum_max_workers

- autovacuum_multixact_freeze_max_age

- autovacuum_naptime

- autovacuum_vacuum_cost_delay

- autovacuum_vacuum_cost_limit

- autovacuum_vacuum_scale_factor

- autovacuum_vacuum_threshold

- gp_autovacuum_scope

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 | [gpadmin@gpdb7 ~]$ gpconfig -s autovacuum Values on all segments are consistent GUC : autovacuum Coordinator value: on Segment value: on [gpadmin@gpdb7 ~]$ gpconfig -s autovacuum_analyze_scale_factor Values on all segments are consistent GUC : autovacuum_analyze_scale_factor Coordinator value: 0.1 Segment value: 0.1 [gpadmin@gpdb7 ~]$ [gpadmin@gpdb7 ~]$ gpconfig -s autovacuum_analyze_threshold Values on all segments are consistent GUC : autovacuum_analyze_threshold Coordinator value: 50 Segment value: 50 [gpadmin@gpdb7 ~]$ [gpadmin@gpdb7 ~]$ gpconfig -s autovacuum_max_workers Values on all segments are consistent GUC : autovacuum_max_workers Coordinator value: 3 Segment value: 3 [gpadmin@gpdb7 ~]$ gpconfig -s gp_autovacuum_scope Values on all segments are consistent GUC : gp_autovacuum_scope Coordinator value: catalog Segment value: catalog [gpadmin@gpdb7 ~]$ [gpadmin@gpdb7 ~]$ gpconfig -s autovacuum_naptime Values on all segments are consistent GUC : autovacuum_naptime Coordinator value: 1min Segment value: 1min [gpadmin@gpdb7 ~]$ [gpadmin@gpdb7 ~]$ ps -ef|grep cuum gpadmin 1252 1236 0 12:17 ? 00:00:00 postgres: 6000, autovacuum launcher gpadmin 1259 1241 0 12:17 ? 00:00:00 postgres: 6001, autovacuum launcher gpadmin 1279 1273 0 12:17 ? 00:00:00 postgres: 5432, autovacuum launcher gpadmin 2903 461 0 12:41 pts/1 00:00:00 grep --color=auto cuum [gpadmin@gpdb7 ~]$ gpconfig -c gp_autostats_mode -v on_change gpconfig -c gp_autostats_on_change_threshold -v 100000 |

示例:

1 2 3 4 5 6 7 8 | create table t8 as select * from pg_class limit 5; create table t9 as select * from pg_class limit 50; select * from pg_stat_all_tables where schemaname='public' and relname = 't9'; SELECT * from pg_stats d where d.tablename='t9'; |

支持仅索引扫描和覆盖索引

增加了对覆盖索引(covering index)的支持。覆盖索引允许用户使用INCLUDE 子句将额外的列添加到索引中,对于执行索引 (index-only)扫描非常有用,特别是对于那些不能被B-tree所索引的数据类型。 Greenplum 查询优化器部分支持索引扫描和覆盖索引。

注意:不能使用GitHub上的开源版本,否则不能使用仅索引扫描和覆盖索引!!!

Index-only scans can answer queries from an index alone without accessing the table’s heap, which significantly improves query performance. In addition, covering indexes allow you to add additional columns to an index using the INCLUDE clause, in order to make the use of index-only scans more effective. See Understanding Index-Only Scans and Covering Indexes for more details.

支持Index only的索引扫描,并支持CREATE INDEX的INCLUDE子句,允许在索引查询时不再回表,从而提升索引查询的性能。

测试环境:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 | drop table t1; drop table t2; drop table t3; create table t1 (id int, c1 int, c2 int, c3 int, info text, crt_time timestamp); create table t2(like t1); create table t3(like t1); create index idx_t1_1 on t1 (id) include(c1,c2,c3,info,crt_time); create index idx_t2_1 on t2 (id,c1,c2,c3,info,crt_time); create index idx_t3_1 on t3(id); \timing on insert into t1 select (1000*random())::int,1,1,1,'test',now() from generate_series(1,10000000); insert into t2 select (1000*random())::int,1,1,1,'test',now() from generate_series(1,10000000); insert into t3 select (1000*random())::int,1,1,1,'test',now() from generate_series(1,10000000); explain (analyze,verbose,timing,costs,buffers) select id,c1,c2,c3,info,crt_time from t1 where id=10000; explain (analyze,verbose,timing,costs,buffers) select id,c1,c2,c3,info,crt_time from t2 where id=10000; explain (analyze,verbose,timing,costs,buffers) select id,c1,c2,c3,info,crt_time from t3 where id=10000; analyze t1; analyze t2; analyze t3; select id,c1,c2,c3,info,crt_time from t1 where id=1000; select id,c1,c2,c3,info,crt_time from t2 where id=1000; select id,c1,c2,c3,info,crt_time from t3 where id=1000; create table t66(id int PRIMARY key, c1 text, crt_time timestamp); insert into t66 SELECT id, md5(id::text),now() FROM generate_series(1, 2000000) AS id; create index idx_t66_1 on t66 (id) include(c1); explain select id,c1 from t66 where id =100; |

视频:https://www.youtube.com/watch?v=J7_xLoq3E20&t=1383s

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 | db2=# explain select id,c1,c2,c3,info,crt_time from t1 where id=1000; QUERY PLAN -------------------------------------------------------------------------------- Gather Motion 1:1 (slice1; segments: 1) (cost=0.00..9.82 rows=9883 width=29) -> Index Scan using idx_t1_1 on t1 (cost=0.00..8.26 rows=4942 width=29) Index Cond: (id = 1000) Optimizer: GPORCA (4 rows) Time: 2.374 ms db2=# explain select id,c1,c2,c3,info,crt_time from t2 where id=1000; QUERY PLAN ---------------------------------------------------------------------------------- Gather Motion 1:1 (slice1; segments: 1) (cost=0.00..14.31 rows=10933 width=29) -> Index Scan using idx_t2_1 on t2 (cost=0.00..12.59 rows=5467 width=29) Index Cond: (id = 1000) Optimizer: GPORCA (4 rows) Time: 3.194 ms db2=# explain select id,c1,c2,c3,info,crt_time from t3 where id=1000; QUERY PLAN -------------------------------------------------------------------------------- Gather Motion 1:1 (slice1; segments: 1) (cost=0.00..9.40 rows=9775 width=29) -> Index Scan using idx_t3_1 on t3 (cost=0.00..7.86 rows=4888 width=29) Index Cond: (id = 1000) Optimizer: GPORCA (4 rows) Time: 2.755 ms db2=# explain (analyze,verbose,timing,costs,buffers) select id,c1,c2,c3,info,crt_time from t1 where id=10000; QUERY PLAN --------------------------------------------------------------------------------------------------------------------------- Gather Motion 1:1 (slice1; segments: 1) (cost=0.00..6.00 rows=1 width=29) (actual time=1.067..1.068 rows=0 loops=1) Output: id, c1, c2, c3, info, crt_time -> Index Scan using idx_t1_1 on public.t1 (cost=0.00..6.00 rows=1 width=29) (actual time=0.000..0.778 rows=0 loops=1) Output: id, c1, c2, c3, info, crt_time Index Cond: (t1.id = 10000) Optimizer: GPORCA Planning Time: 1.878 ms (slice0) Executor memory: 18K bytes. (slice1) Executor memory: 42K bytes (seg1). Memory used: 128000kB Execution Time: 1.372 ms (11 rows) Time: 3.683 ms db2=# explain (analyze,verbose,timing,costs,buffers) select id,c1,c2,c3,info,crt_time from t2 where id=10000; QUERY PLAN --------------------------------------------------------------------------------------------------------------------------- Gather Motion 1:1 (slice1; segments: 1) (cost=0.00..6.00 rows=1 width=29) (actual time=0.308..0.308 rows=0 loops=1) Output: id, c1, c2, c3, info, crt_time -> Index Scan using idx_t2_1 on public.t2 (cost=0.00..6.00 rows=1 width=29) (actual time=0.000..0.082 rows=0 loops=1) Output: id, c1, c2, c3, info, crt_time Index Cond: (t2.id = 10000) Optimizer: GPORCA Planning Time: 1.940 ms (slice0) Executor memory: 18K bytes. (slice1) Executor memory: 42K bytes (seg1). Memory used: 128000kB Execution Time: 0.550 ms (11 rows) Time: 2.890 ms db2=# explain (analyze,verbose,timing,costs,buffers) select id,c1,c2,c3,info,crt_time from t3 where id=10000; QUERY PLAN --------------------------------------------------------------------------------------------------------------------------- Gather Motion 1:1 (slice1; segments: 1) (cost=0.00..6.00 rows=1 width=29) (actual time=0.329..0.330 rows=0 loops=1) Output: id, c1, c2, c3, info, crt_time -> Index Scan using idx_t3_1 on public.t3 (cost=0.00..6.00 rows=1 width=29) (actual time=0.000..0.066 rows=0 loops=1) Output: id, c1, c2, c3, info, crt_time Index Cond: (t3.id = 10000) Optimizer: GPORCA Planning Time: 1.971 ms (slice0) Executor memory: 18K bytes. (slice1) Executor memory: 42K bytes (seg1). Memory used: 128000kB Execution Time: 0.626 ms (11 rows) Time: 3.070 ms db2=# db2=# explain select id,c1 from t66 where id =100; QUERY PLAN ----------------------------------------------------------------------------- Gather Motion 1:1 (slice1; segments: 1) (cost=0.00..6.00 rows=1 width=37) -> Index Scan using t66_pkey on t66 (cost=0.00..6.00 rows=1 width=37) Index Cond: (id = 100) Optimizer: GPORCA (4 rows) Time: 3.489 ms |

引入监控视图可以监控长运行的操作

引入了pg_stat_progress_vacuum/copy/create_index等进度视图。

Greenplum Database can report the progress of ANALYZE, CLUSTER, COPY, CREATE INDEX, REINDEX, and VACUUM commands during command execution. Greenplum can also report the progress of a running base backup (initiated during gprecoverseg -F) command invocation, allowing you to monitor the progress of these possibly long-running operations.

- gp_stat_progress_analyze

- gp_stat_progress_basebackup

- gp_stat_progress_cluster

- gp_stat_progress_copy

- gp_stat_progress_create_index

- gp_stat_progress_vacuum

1 2 3 4 5 6 | select * from gp_stat_progress_analyze; select * from gp_stat_progress_basebackup; select * from gp_stat_progress_cluster; select * from gp_stat_progress_copy ; select * from gp_stat_progress_create_index; select * from gp_stat_progress_vacuum ; |

参考:

https://greenplum.org/progress-reporting-views-in-greenplum-7/

https://greenplum.org/partition-in-greenplum-7-recursion-and-inheritance/

新增pg_backend_memory_contexts的系统视图

新增了一个名为pg_backend_memory_contexts的系统视图,可以查看后台的内存使用情况。

1 | SELECT * from pg_backend_memory_contexts d ORDER BY d.total_bytes desc ; |

增加列不再需要重写表

Greenplum Database no longer rewrites the table when a column is added to a table (ALTER TABLE ... ADD COLUMN ...).

ALTER TABLE ... ADD COLUMN ...不再需要重写表:这个功能是PostgreSQL11引入的特性,通过在pg_attribute系统表中增加atthasmissing和attmissingval两个额外字段,所以GP7自然也支持了该功能,可以有效降低加字段所带来的影响。

| Operation | AO Columnar (AOCO) | AO Row (AO) | Heap |

|---|---|---|---|

| DROP COLUMN | No | No | No |

| ALTER COLUMN TYPE [1] | No [2] | Yes | Yes |

| ADD COLUMN (w/ non-volative default [3]) | No [2] | No | No |

| ADD COLUMN (w/ volative default) | No [2] | Yes | Yes |

| ALTER COLUMN SET ENCODING | No [2] | N/A | N/A |

| SET ()[4] | Yes | Yes | Yes |

| SET ACCESS METHOD | Yes | Yes | Yes |

[1] If the new type is not binary coercible with the old. Otherwise, no rewrite for all cases.

[2] But write/rewrite single column data.

[3] Including NULL.

[4] If the options differ from the current table. Otherwise, no rewrite for all cases.

参考:https://greenplum.org/alter-table-in-greenplum-7-avoiding-table-rewrite/

分区表

PostgreSQL declarative table partitioning syntaxis now supported. See About Changes to Table Partitioning in Greenplum 7 for more details.

1 2 3 4 5 6 7 8 | -- Assuming there's long-running insert INSERT INTO sales SELECT * FROM ext_sales_data; -- This will be blocked ALTER TABLE sales ADD PARTITION march_sales START ('2023-03-01') END ('2023-04-01'); -- This will go through ALTER TABLE sales ATTACH PARTITION march_sales FOR VALUES FROM ('2023-03-01') TO ('2023-04-01'); -- 这种方式添加分区不会被阻塞 |

分区表删除的视图:

1 2 3 4 5 | select * from pg_partition_columns; select * from pg_partition_encoding; select * from pg_partition_rule; select * from pg_partition_template; select * from pg_stat_partition_operation; |

参考:

https://greenplum.org/20-examples-of-greenplum-partition-commands/

https://greenplum.org/partition-in-greenplum-7-whats-new/

https://greenplum.org/partition-in-greenplum-7-recursion-and-inheritance/

其它

创建分区表

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 | --GBDP7版本可以先只定义父分区表,不定义子分区表 postgres=# CREATE TABLE sales (id int, date date, amt decimal(10,2)) postgres-# DISTRIBUTED BY (id) postgres-# PARTITION BY RANGE (date); CREATE TABLE --GBDP7版本也可以定义父分区表的同时定义子分区表(保留了以往的经典语法) postgres=# drop table sales ; DROP TABLE postgres=# CREATE TABLE sales (id int, date date, amt decimal(10,2)) postgres-# DISTRIBUTED BY (id) postgres-# PARTITION BY RANGE (date) postgres-# (PARTITION jan_sales START ('2023-01-01') END ('2023-02-01'), postgres(# PARTITION feb_sales START ('2023-02-01') END ('2023-03-01'), postgres(# DEFAULT PARTITION other_sales); CREATE TABLE --GPDB6版本创建分区表时则不能只定义父分区表,否则会报错 postgres=# CREATE TABLE sales (id int, date date, amt decimal(10,2)) postgres-# DISTRIBUTED BY (id) postgres-# PARTITION BY RANGE (date); ERROR: no partitions specified at depth 1 LINE 3: PARTITION BY RANGE (date); |

添加新的子分区

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 | --GPDB7版本创建新表并将其添加为新的子分区有两种语法,一个是使用FOR VALUES FROM ... TO ,一个是...START ... END () postgres=# CREATE TABLE Mar_sales PARTITION OF sales FOR VALUES FROM ('2023-03-01') TO ('2023-04-01'); NOTICE: table has parent, setting distribution columns to match parent table CREATE TABLE postgres=# ALTER TABLE sales ADD PARTITION Apr_sales START ('2023-04-01') END ('2023-04-30'); ALTER TABLE --GPDB6版本添加新的子分区则只有使用...START ... END (),使用FOR VALUES FROM ... TO 则报错 postgres=# ALTER TABLE sales ADD PARTITION Apr_sales postgres-# START ('2023-04-01') END ('2023-04-30'); NOTICE: CREATE TABLE will create partition "sales_1_prt_apr_sales" for table "sales" ALTER TABLE postgres=# ALTER TABLE sales ADD PARTITION Apr_sales postgres-# FOR VALUES FROM ('2023-04-01') TO ('2023-04-30'); ERROR: syntax error at or near "FOR" LINE 2: FOR VALUES FROM ('2023-04-01') TO ('2023-04-30'); --GPDB7版本将现有表添加为新的子分区 postgres=# CREATE TABLE Apr_sales (LIKE sales); NOTICE: table doesn't have 'DISTRIBUTED BY' clause, defaulting to distribution columns from LIKE table CREATE TABLE postgres=# ALTER TABLE sales ATTACH PARTITION Apr_sales FOR VALUES FROM ('2024-04-01') TO ('2024-05-01'); ALTER TABLE --GPDB6版本则不支持该语法 postgres=# ALTER TABLE sales ATTACH PARTITION Apr_sales FOR VALUES FROM ('2024-04-01') TO ('2024-05-01'); ERROR: syntax error at or near "ATTACH" LINE 1: ALTER TABLE sales ATTACH PARTITION Apr_sales FOR VALUES FRO... |

删除子分区

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 | --GPDB7版本除使用DROP PARTITION外,还可以使用DETACH PARTITION postgres=# alter table sales DROP PARTITION other_sales; ALTER TABLE postgres=# ALTER TABLE sales DETACH PARTITION Mar_sales; ALTER TABLE --!!!需要注意的是,使用DROP PARTITION是将分区表直接删除,而DETACH PARTITION只是将分区表从分区结构中删除,但表任然在数据库中** postgres=# \d+ sales Partitioned table "public.sales" Column | Type | Collation | Nullable | Default | Storage | Stats target | Description --------+---------------+-----------+----------+---------+---------+--------------+------------- id | integer | | | | plain | | date | date | | | | plain | | amt | numeric(10,2) | | | | main | | Partition key: RANGE (date) Partitions: sales_1_prt_feb_sales FOR VALUES FROM ('2023-02-01') TO ('2023-03-01'), sales_1_prt_jan_sales FOR VALUES FROM ('2023-01-01') TO ('2023-02-01') Distributed by: (id) Access method: heap postgres=# \d other_sales Did not find any relation named "other_sales". postgres=# \d mar_sales Table "public.mar_sales" Column | Type | Collation | Nullable | Default --------+---------------+-----------+----------+--------- id | integer | | | date | date | | | amt | numeric(10,2) | | | Distributed by: (id) --GPDB6版本只能使用DROP PARTITION postgres=# ALTER TABLE sales DROP PARTITION Apr_sales ; ALTER TABLE postgres=# ALTER TABLE sales DETACH PARTITION Mar_sales; ERROR: syntax error at or near "DETACH" LINE 1: ALTER TABLE sales DETACH PARTITION Mar_sales; |

将现有分区与另一个表交换

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 | --GPDB6和GPDB7都先创建一个常规表 postgres=# create table test (like sales); NOTICE: table doesn't have 'DISTRIBUTED BY' clause, defaulting to distribution columns from LIKE table CREATE TABLE --GPDB6查看当前分区情况 postgres=# \d+ sales Table "public.sales" Column | Type | Modifiers | Storage | Stats target | Description --------+---------------+-----------+---------+--------------+------------- id | integer | | plain | | date | date | | plain | | amt | numeric(10,2) | | main | | Child tables: sales_1_prt_feb_sales, sales_1_prt_jan_sales Distributed by: (id) Partition by: (date) --GPDB6将现有分区feb_sales替换为test postgres=# ALTER TABLE sales EXCHANGE PARTITION feb_sales WITH TABLE test; ALTER TABLE --GPDB6再次查看分区情况时,可以看到仍和原来一样 postgres=# \d+ sales Table "public.sales" Column | Type | Modifiers | Storage | Stats target | Description --------+---------------+-----------+---------+--------------+------------- id | integer | | plain | | date | date | | plain | | amt | numeric(10,2) | | main | | Child tables: sales_1_prt_feb_sales, sales_1_prt_jan_sales Distributed by: (id) Partition by: (date) --GPDB7查看当前分区情况 postgres=# \d+ sales Partitioned table "public.sales" Column | Type | Collation | Nullable | Default | Storage | Stats target | Description --------+---------------+-----------+----------+---------+---------+--------------+------------- id | integer | | | | plain | | date | date | | | | plain | | amt | numeric(10,2) | | | | main | | Partition key: RANGE (date) Partitions: sales_1_prt_feb_sales FOR VALUES FROM ('2023-02-01') TO ('2023-03-01'), sales_1_prt_sales_1_prt_jan_sales FOR VALUES FROM ('2023-01-01') TO ('2023-01-31') Distributed by: (id) Access method: heap --除去和GPDB6一样的可以用EXCHANGE PARTITION外,还可以使用DETACH PARTITION和 ATTACH PARTITION postgres=# ALTER TABLE sales DETACH PARTITION sales_1_prt_feb_sales; ALTER TABLE postgres=# ALTER TABLE sales ATTACH PARTITION test FOR VALUES FROM ('2023-02-01') TO ('2023-03-01'); ALTER TABLE --再次查看分区情况时可以发现sales_1_prt_feb_sales已经替换为test了。 postgres=# \d+ sales Partitioned table "public.sales" Column | Type | Collation | Nullable | Default | Storage | Stats target | Description --------+---------------+-----------+----------+---------+---------+--------------+------------- id | integer | | | | plain | | date | date | | | | plain | | amt | numeric(10,2) | | | | main | | Partition key: RANGE (date) Partitions: sales_1_prt_sales_1_prt_jan_sales FOR VALUES FROM ('2023-01-01') TO ('2023-01-31'), test FOR VALUES FROM ('2023-02-01') TO ('2023-03-01') Distributed by: (id) Access method: heap |

!!!! 这里需要注意的是分区名和表名的区别,使用旧语法创建或者添加子分区的情况下会添加指定的前缀,例如:sales*1_prt_feb_sales中sales_1_prt*是前缀,sales_1_prt_feb_sales是分区名,而表名则是feb_sales 。而在新语法中则是将指定为表名的名称视为表名,没有前缀,例如分区表test。以上例子中sales_1_prt_sales_1_prt_jan_sales和test是分别用新旧两种语法创建的分区表,虽然同为sales的分区表,但是很难将两者联系到一起。因此,建议对于同一分区表,尽量使用相同的新语法或旧语法,以避免名称歧义。

虽然新语法的专业性较低,但一旦熟悉了用法,它们的通用性将能够更轻松地实现更复杂的层次结构。当然如果习惯了旧语法,GPDB7也是兼容的,可以继续使用。

新旧语法比较表

| 用例 | 旧语法 | 新语法 |

|---|---|---|

| 与父分区一起创建子分区 | CREATE TABLE … (PARTITION …) | CREATE TABLE … PARTITION BY和 CREATE TABLE … PARTITION OF |

| 创建和添加分区 | ALTER TABLE … ADD PARTITION | CREATE TABLE … PARTITION OF |

| 将现有分区子级与常规表交换 | ALTER TABLE … EXCHANGE PARTITION | ALTER TABLE … DETACH PARTITION和 ATTACH PARTITION |

| 删除分区 | ALTER TABLE … DROP PARTITION | DROP TABLE |

| 拆分分区 | ALTER TABLE … SPLIT PARTITION | DETACH 分区和 新分区分开ATTACH |

在GPDB7版本中,不再支持多列分区(GPDB6版本也不支持)

1 2 3 4 | postgres=# CREATE TABLE foo (a int, b int, c int) PARTITION BY list (b,c); NOTICE: Table doesn't have 'DISTRIBUTED BY' clause -- Using column named 'a' as the Greenplum Database data distribution key for this table. HINT: The 'DISTRIBUTED BY' clause determines the distribution of data. Make sure column(s) chosen are the optimal data distribution key to minimize skew. ERROR: cannot use "list" partition strategy with more than one column |

但可以创建一个复合类型并将其用作分区键(GPDB6版本不支持)

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 | postgres=# CREATE TYPE partkey as (b int, c int); CREATE TYPE postgres=# CREATE TABLE foo (a int, b int, c int) postgres-# PARTITION BY LIST ((row(b, c)::partkey)); NOTICE: Table doesn't have 'DISTRIBUTED BY' clause -- Using column named 'a' as the Greenplum Database data distribution key for this table. HINT: The 'DISTRIBUTED BY' clause determines the distribution of data. Make sure column(s) chosen are the optimal data distribution key to minimize skew. CREATE TABLE postgres=# \d+ foo Partitioned table "public.foo" Column | Type | Collation | Nullable | Default | Storage | Stats target | Description --------+---------+-----------+----------+---------+---------+--------------+------------- a | integer | | | | plain | | b | integer | | | | plain | | c | integer | | | | plain | | Partition key: LIST ((ROW(b, c)::partkey)) Number of partitions: 0 Distributed by: (a) Access method: heap |

另外和分区表相关的更新不止是SQL语法上的更新,虽然GPDB7是基于PostgreSQL12.12版本,但是pg_dump命令是和PostgreSQL最新版本pg_dump中的选项一样是可以支持父表和分区表同时转储的

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 | [gpadmin@gp7_rh8_mas201 ~]$ pg_dump --version pg_dump (PostgreSQL) 12.12 [gpadmin@gp7_rh8_mas201 ~]$ pg_dump --help |grep children --exclude-table-and-children=PATTERN --exclude-table-data-and-children=PATTERN --table-and-children=PATTERN dump only the specified table(s), [gpadmin@gp7_rh8_mas201 ~]$ pg_dump --table-and-children=sales -f 20231116.sql [gpadmin@gp7_rh8_mas201 ~]$ more 20231116.sql -- -- Greenplum Database database dump -- -- Dumped from database version 12.12 -- Dumped by pg_dump version 12.12 SET gp_default_storage_options = ''; SET statement_timeout = 0; SET lock_timeout = 0; SET idle_in_transaction_session_timeout = 0; SET client_encoding = 'UTF8'; SET standard_conforming_strings = on; SELECT pg_catalog.set_config('search_path', '', false); SET check_function_bodies = false; SET xmloption = content; SET client_min_messages = warning; SET row_security = off; SET default_tablespace = ''; SET default_table_access_method = heap; -- -- Name: sales; Type: TABLE; Schema: public; Owner: gpadmin -- CREATE TABLE public.sales ( id integer, date date, amt numeric(10,2) ) PARTITION BY RANGE (date) DISTRIBUTED BY (id); ALTER TABLE public.sales OWNER TO gpadmin; -- -- Name: sales_1_prt_sales_1_prt_jan_sales; Type: TABLE; Schema: public; Owner: gpadmin -- CREATE TABLE public.sales_1_prt_sales_1_prt_jan_sales ( id integer, date date, amt numeric(10,2) ) DISTRIBUTED BY (id); ALTER TABLE public.sales_1_prt_sales_1_prt_jan_sales OWNER TO gpadmin; -- -- Name: test; Type: TABLE; Schema: public; Owner: gpadmin -- CREATE TABLE public.test ( id integer, date date, amt numeric(10,2) ) DISTRIBUTED BY (id); ALTER TABLE public.test OWNER TO gpadmin; -- -- Name: sales_1_prt_sales_1_prt_jan_sales; Type: TABLE ATTACH; Schema: public; Owner: gpadmin -- ALTER TABLE ONLY public.sales ATTACH PARTITION public.sales_1_prt_sales_1_prt_jan_sales FOR VALUES FROM ('2023-0 1-01') TO ('2023-01-31'); -- -- Name: test; Type: TABLE ATTACH; Schema: public; Owner: gpadmin -- ALTER TABLE ONLY public.sales ATTACH PARTITION public.test FOR VALUES FROM ('2023-02-01') TO ('2023-03-01'); -- -- Data for Name: sales_1_prt_sales_1_prt_jan_sales; Type: TABLE DATA; Schema: public; Owner: gpadmin -- COPY public.sales_1_prt_sales_1_prt_jan_sales (id, date, amt) FROM stdin; \. -- -- Data for Name: test; Type: TABLE DATA; Schema: public; Owner: gpadmin -- COPY public.test (id, date, amt) FROM stdin; \. -- -- Greenplum Database database dump complete -- --从文件内容可以看到父表和子表都全部转储。 |

Example 1: Creating a Range Partitioned Table

This example creates a sales table partitioned by range using the sale_date column.

1 2 3 4 5 6 | -- (GP7 only) CREATE TABLE sales( sale_id SERIAL, sale_date DATE, amount DECIMAL ) PARTITION BY RANGE (sale_date); |

Then adds a new partition to the sales table for the first half of 2023.

1 2 3 | -- (GP7 only) CREATE TABLE sales_p1 PARTITION OF sales FOR VALUES FROM ('2023-01-01') TO ('2023-06-30'); |

Example 2: Classic Range Partitioned Table

For convenience, a single command can be used.

1 2 3 4 5 6 7 8 | CREATE TABLE sales( sale_id SERIAL, sale_date DATE, amount DECIMAL ) PARTITION BY RANGE (sale_date) ( PARTITION p1 START ('2023-01-01') END ('2023-06-30') ); |