原 GreenPlum 7.3.0新特性介绍

简介

GreenPlum 7.0.0于2023-09-28发布,大约半年后,GreenPlum 7.1.0于2024-02-09发布,GreenPlum 7.2.0于2024-06-20发布,GreenPlum 7.3.0于2024-08-02发布。

在本文中,麦老师就其中一些比较实用的新特性做一些简单说明。

GreenPlum 7.3.0环境准备

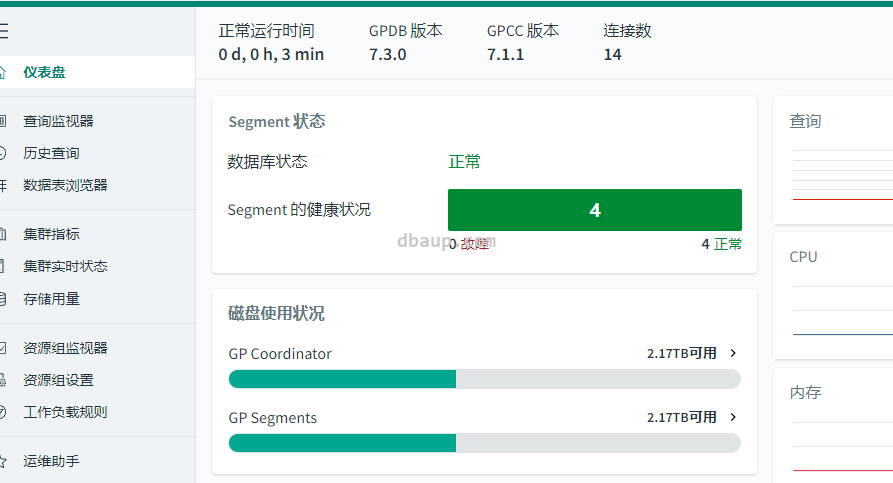

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 | -- docker pull lhrbest/greenplum:7.3.0 docker rm -f gpdb73 docker run -itd --name gpdb73 -h gpdb7 \ -p 5438:5432 -p 28287:28080 \ -v /sys/fs/cgroup:/sys/fs/cgroup \ --privileged=true lhrbest/greenplum:7.3.0 \ /usr/sbin/init docker exec -it gpdb73 bash su - gpadmin gpstart -a gpcc start gpcc status gpstate |

此docker包括1个master,1个standby master,2个segment,2个mirror实例;还包括gpcc 7.1.1

CDC功能

VMware Greenplum 7.3.0 now supports logical decoding, allowing Greenplum Database to be used as a source database in the Greenplum Change Data Capture solution.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 | [gpadmin@gpdb7 ~]$ gpconfig -s wal_level Values on all segments are consistent GUC : wal_level Coordinator value: replica Segment value: replica [gpadmin@gpdb7 ~]$ gpconfig -c wal_level -v logical 20240807:09:40:38:004858 gpconfig:gpdb7:gpadmin-[INFO]:-completed successfully with parameters '-c wal_level -v logical' [gpadmin@gpdb7 ~]$ [gpadmin@gpdb7 ~]$ gpstop -M fast -ar 20240807:09:40:44:004951 gpstop:gpdb7:gpadmin-[INFO]:-Starting gpstop with args: -M fast -ar 20240807:09:40:44:004951 gpstop:gpdb7:gpadmin-[INFO]:-Gathering information and validating the environment... 20240807:09:40:44:004951 gpstop:gpdb7:gpadmin-[INFO]:-Obtaining Greenplum Coordinator catalog information 20240807:09:40:44:004951 gpstop:gpdb7:gpadmin-[INFO]:-Obtaining Segment details from coordinator... 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 7.3.0 build commit:ebf6c7db0034f8928f20252a306c4ecdb9324d81' 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Commencing Coordinator instance shutdown with mode='fast' 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Coordinator segment instance directory=/opt/greenplum/data/master/gpseg-1 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Attempting forceful termination of any leftover coordinator process 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Terminating processes for segment /opt/greenplum/data/master/gpseg-1 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Stopping coordinator standby host gpdb7 mode=fast 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Successfully shutdown standby process on gpdb7 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Targeting dbid [2, 4, 3, 5] for shutdown 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-Commencing parallel primary segment instance shutdown, please wait... 20240807:09:40:45:004951 gpstop:gpdb7:gpadmin-[INFO]:-0.00% of jobs completed 20240807:09:40:48:004951 gpstop:gpdb7:gpadmin-[INFO]:-100.00% of jobs completed 20240807:09:40:48:004951 gpstop:gpdb7:gpadmin-[INFO]:-Commencing parallel mirror segment instance shutdown, please wait... 20240807:09:40:48:004951 gpstop:gpdb7:gpadmin-[INFO]:-0.00% of jobs completed 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:-100.00% of jobs completed 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:----------------------------------------------------- 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:- Segments stopped successfully = 4 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:- Segments with errors during stop = 0 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:----------------------------------------------------- 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:-Successfully shutdown 4 of 4 segment instances 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:-Database successfully shutdown with no errors reported 20240807:09:40:50:004951 gpstop:gpdb7:gpadmin-[INFO]:-Restarting System... [gpadmin@gpdb7 ~]$ gpconfig -s wal_level Values on all segments are consistent GUC : wal_level Coordinator value: logical Segment value: logical [gpadmin@gpdb7 ~]$ |

GP7之前:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 | [gpadmin@gpdb6270 ~]$ gpconfig -c wal_level -v logical 20240807:09:42:29:094409 gpconfig:gpdb6270:gpadmin-[CRITICAL]:-GUC Validation Failed: wal_level cannot be changed under normal conditions. Please refer to gpconfig documentation. GUC Validation Failed: wal_level cannot be changed under normal conditions. Please refer to gpconfig documentation. [gpadmin@gpdb6270 ~]$ [gpadmin@gpdb6270 ~]$ gpconfig -c wal_level -v logical --skipvalidation 20240807:09:42:37:094428 gpconfig:gpdb6270:gpadmin-[INFO]:-completed successfully with parameters '-c wal_level -v logical --skipvalidation' [gpadmin@gpdb6270 ~]$ [gpadmin@gpdb6270 ~]$ [gpadmin@gpdb6270 ~]$ gpstop -M fast -ar 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Starting gpstop with args: -M fast -ar 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Gathering information and validating the environment... 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Obtaining Segment details from master... 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 6.27.0 build commit:482d4e839d519dcfde9cddf03807787d9d91d015' 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Commencing Master instance shutdown with mode='fast' 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Master segment instance directory=/opt/greenplum/data/master/gpseg-1 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Attempting forceful termination of any leftover master process 20240807:09:42:44:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Terminating processes for segment /opt/greenplum/data/master/gpseg-1 20240807:09:42:47:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Stopping master standby host localhost mode=fast 20240807:09:42:47:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Successfully shutdown standby process on localhost 20240807:09:42:47:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Targeting dbid [2, 4, 3, 5] for shutdown 20240807:09:42:47:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Commencing parallel primary segment instance shutdown, please wait... 20240807:09:42:47:094712 gpstop:gpdb6270:gpadmin-[INFO]:-0.00% of jobs completed 20240807:09:42:49:094712 gpstop:gpdb6270:gpadmin-[INFO]:-100.00% of jobs completed 20240807:09:42:49:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Commencing parallel mirror segment instance shutdown, please wait... 20240807:09:42:49:094712 gpstop:gpdb6270:gpadmin-[INFO]:-0.00% of jobs completed 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-100.00% of jobs completed 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:----------------------------------------------------- 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:- Segments stopped successfully = 4 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:- Segments with errors during stop = 0 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:----------------------------------------------------- 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Successfully shutdown 4 of 4 segment instances 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Database successfully shutdown with no errors reported 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Cleaning up leftover gpmmon process 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-No leftover gpmmon process found 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Cleaning up leftover gpsmon processes 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-No leftover gpsmon processes on some hosts. not attempting forceful termination on these hosts 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Cleaning up leftover shared memory 20240807:09:42:51:094712 gpstop:gpdb6270:gpadmin-[INFO]:-Restarting System... [gpadmin@gpdb6270 ~]$ psql psql (9.4.26) Type "help" for help. postgres=# \q [gpadmin@gpdb6270 ~]$ [gpadmin@gpdb6270 ~]$ gpconfig -s wal_level Values on all segments are consistent GUC : wal_level Master value: logical Segment value: logical [gpadmin@gpdb6270 ~]$ |

主GreenPlum创建发布:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 | postgres=# create database db1; CREATE DATABASE postgres=# \c db1; You are now connected to database "db1" as user "gpadmin". db1=# db1=# grant connect on database postgres to repuser; GRANT db1=# grant USAGE on SCHEMA public to repuser; GRANT db1=# alter user repuser superuser; ALTER ROLE db1=# db1=# db1=# create table test(id int4 primary key ,name text); \d+ test; CREATE TABLE db1=# insert into test values (1,'a'); INSERT 0 1 db1=# \d+ test; Table "public.test" Column | Type | Collation | Nullable | Default | Storage | Stats target | Description --------+---------+-----------+----------+---------+----------+--------------+------------- id | integer | | not null | | plain | | name | text | | | | extended | | Indexes: "test_pkey" PRIMARY KEY, btree (id) Distributed by: (id) Access method: heap db1=# db1=# create PUBLICATION pub1 FOR TABLE test; CREATE PUBLICATION db1=# select * from pg_publication; oid | pubname | pubowner | puballtables | pubinsert | pubupdate | pubdelete | pubtruncate -------+---------+----------+--------------+-----------+-----------+-----------+------------- 19329 | pub1 | 10 | f | t | t | t | t (1 row) |

容灾GreenPlum、或容灾PG端 创建订阅都报错:

1 2 3 4 5 | postgres=# \c db1 You are now connected to database "db1" as user "gpadmin". db1=# create subscription sub1 connection 'host=192.92.0.52 port=5432 dbname=db1 user=repuser password=lhr' publication pub1; ERROR: could not connect to the publisher: FATAL: System was started in single node mode - only utility mode connections are allowed (subscriptioncmds.c:465) db1=# |

找了官方文档并没有发现如何配置cdc。

中文翻译

VMware Greenplum 7.3.0 是一个小版本更新,包含新功能和更改,并解决了多个问题。

新功能

VMware Greenplum 7.3.0 引入了 Greenplum 自动化机器学习代理 (gpMLBot),这是一个命令行界面,帮助用户使用 Apache MADlib 和 PostgresML 进行自动数据处理、超参数优化和模型管理。

VMware Greenplum 7.3.0 引入了 gp_toolkit 中的 CPU 核心计数功能。该功能包括一个 UDF(__gp_get_num_logical_cores)和三个视图(gp_toolkit.gp_num_physical_cores、gp_toolkit.gp_num_physical_cores_segments 和 gp_toolkit.gp_num_physical_cores_per_host)。此功能设计用于 Linux,允许超级用户统计 Greenplum 集群中的物理核心数量,包括段主机和各个主机。

增强功能

VMware Greenplum 7.3.0 现在支持 orafce_ext 的依赖扩展的自动创建。运行 CREATE EXTENSION orafce_ext CASCADE; 命令时,将自动创建控制文件中声明的依赖扩展。

VMware Greenplum 7.3.0 现在支持逻辑解码,允许将 Greenplum 数据库用作 Greenplum 数据捕获解决方案中的源数据库。

VMware Greenplum 7.3.0 现在在以下输入配置文件中支持 hostname 参数:

- gpaddmirrors

- gpmovemirrors

VMware Greenplum 7.3.0 通过以下方式改进了 gpexpand.status_detail 的 UPDATE 性能:

- 仅使用协调器分发。

- 在表上创建 Btree 索引。

- 减少用于更新 gpexpand.status_detail 的连接总数,以匹配并行作业数。

VMware Greenplum 7.3.0 优化了空闲进程的管理,以提高性能。

VMware Greenplum 7.3.0 现在支持在名称中包含换行符或逗号的表上使用 analyzedb。

VMware Greenplum 7.3.0 现在支持查看重新分发失败的表和已删除的表:

- 重新分发失败:重新分发失败的表在 gpexpand 状态表和视图中进行跟踪。

- 已删除的表:自设置阶段以来删除的表在 gpexpand.expansion_progress 视图中进行跟踪。

更新的库

- Greenplum 包实用程序 gppkg 已更新至版本 2.2.1。

解决的问题

服务器

- 35507450 解决了从非空、零列追加优化表中选择时可能导致分段错误的问题。

- N/A 解决了同时使用位图和其他索引时发生的查询结果错误。

- N/A 解决了 gp_interconnect_address_type=wildcard 无法正常工作的问题。

- N/A 解决了计划器为使用 aggfilter 的 DQA 生成错误计划的问题。

- N/A 解决了处理大 gxid 值时 waitGxids 的问题。

- N/A 解决了由 initplan 函数引起的文件泄漏问题。

- N/A 解决了 DistributedTransactionId 到 TransactionId 转换在 UDF gp_distributed_xid() 以及视图 gp_distributed_log 和 gp_distributed_xacts 中引起不正确值的问题。

- N/A 解决了 pg_resqueue 中 active_statements 设置值错误的问题。

- N/A 解决了 TRUNCATE 命令产生的尝试更新不可见元组的错误。

- N/A 解决了 ALTER DATABASE/ROLE ... SET gp_default_storage_options 在各段之间不一致的问题。

- N/A 解决了在非哈希列上执行 EXCEPT 操作时发生的错误。

查询处理

- 35258690 解决了当数据库 LC_COLLATE 和 LC_CTYPE 设置为 C 时 Orca 无法正确处理中文字符的问题。

- 35507324 解决了 Orca 在调用 percentile_cont 和 percentile_disc 时传递引用数据类型(如 numeric 和 interval)崩溃的问题。

- N/A 解决了 Orca 的 libpq 接口 VIEW 返回不准确 resorigtbl 元数据的问题。

- N/A 解决了 H3 扩展版本函数错误显示版本为未发布而非正确已发布版本的问题。

全部新特性原文

Release 7.3.0

Release Date: 2024-08-02

VMware Greenplum 7.3.0 is a minor release that includes new and changed features and resolves several issues.

New Features

- VMware Greenplum 7.3.0 introduces the Greenplum Automated Machine Learning Agent (gpMLBot), a command-line interface to assist users in utilizing Apache MADlib and PostgresML for automated data processing, hyperparameter optimization, and model management.

- VMware Greenplum 7.3.0 introduces a feature for counting CPU cores in

gp_toolkit. This includes one UDF (__gp_get_num_logical_cores) and three views (gp_toolkit.gp_num_physical_cores,gp_toolkit.gp_num_physical_cores_segments, andgp_toolkit.gp_num_physical_cores_per_host). This feature, designed for Linux, allows super users to count physical cores across the Greenplum cluster, including segment hosts and individual hosts.

Enhancements

- VMware Greenplum 7.3.0 now supports automatic creation of dependent extensions for

orafce_ext. Running theCREATE EXTENSION orafce_ext CASCADE;command automatically creates the dependent extensions as declared in the control file. - VMware Greenplum 7.3.0 now supports logical decoding, allowing Greenplum Database to be used as a source database in the Greenplum Change Data Capture solution.

- VMware Greenplum 7.3.0 now supports the hostname parameter in the following input configuration files:

gpaddmirrorsgpmovemirrors

- VMware Greenplum 7.3.0 improves

UPDATEperformance forgpexpand.status_detailby:- Using coordinator-only distribution.

- Creating a Btree index on the table.

- Reducing the overall number of connections for updating

gpexpand.status_detailto match the number of parallel jobs.

- VMware Greenplum 7.3.0 optimizes the management of idle processes to improve performance.

- VMware Greenplum 7.3.0 now supports the use of analyzedb on tables containing newline or comma characters in their names.

- VMware Greenplum 7.3.0 now supports viewing tables that failed to redistribute and tables that have been dropped:

- Failed Redistribution: Tables that failed to redistribute are tracked in the

gpexpandstatus tables and views. - Dropped Tables: Tables dropped since the setup phase are tracked in the

gpexpand.expansion_progressview.

- Failed Redistribution: Tables that failed to redistribute are tracked in the

Updated Libraries

- The Greenplum package utility

gppkghas been updated to version 2.2.1.

Resolved Issues

Server

35507450

Resolves an issue where selecting from non-empty, zero-column append-optimized table could cause a segmentation fault.

N/A

本人提供Oracle(OCP、OCM)、MySQL(OCP)、PostgreSQL(PGCA、PGCE、PGCM)等数据库的培训和考证业务,私聊QQ646634621或微信dbaup66,谢谢!